Hi there, I'm

TEJAS KALSAIT

AI and Robotics Engineer

Currently working as a Generative AI Red Team Analyst @Google (via Vaco) and former Autonomous Driving Researcher @SUNY Buffalo

Hey there!

As a Generative AI Red Team Analyst at Google (via Vaco), I validate and test the Gemini model and other AI products to ensure their safety and reliability. Previously, I worked as an Autonomous Driving Research Assistant at the State University of New York at Buffalo, where I developed cutting-edge digital twins that replicated real-world scenarios. With a Master's degree in Robotics and Computer Science, I bring a unique combination of theoretical knowledge and hands-on experience in machine learning, deep learning, and artificial intelligence, which has enabled me to launch two successful generative AI products in the past year. I'm excited to collaborate on innovative projects and explore new ideas, so feel free to reach out if you'd like to build something awesome together, have a job offer for me, or just want to chat about the latest developments in AI!

My Experience

● As a Generative AI Red Team Analyst at Google (via Vaco), I'm validating and testing the Gemini model and other AI products to ensure their safety and reliability.

● Spearheaded development of a high-fidelity Digital-Twin and Co-Simulator for SUNY Buffalo's autonomous vehicle research. Created an automated pipeline that builds detailed virtual worlds using cameras and depth estimation techniques, improving algorithm-testing efficiency by 30% and enabling testing against edge cases.

● Developed an automated cross-sensor calibration pipeline for the ego vehicle's sensors, including camera-LIDAR extrinsic calibration, improving object detection and tracking reliability by 30%.

● Collaborated with cross-functional teams to integrate the ego vehicle's GNSS and LIDAR sensors and deploy the NDT (Normal Distributions Transform) scan-matching algorithm, improving vehicle localization accuracy by 15%.

● Engineered high-precision Autoware maps (Lanelet2) with 1.5 cm accuracy by processing gigabytes of LIDAR point clouds, improving testing accuracy on real vehicles by 20%.
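
The digital-twin bullet mentions building virtual worlds from cameras and depth estimation. A core geometric step in that kind of pipeline is back-projecting an estimated depth map into a 3D point cloud and placing it in a world frame. The plain-Python sketch below shows that step under a pinhole camera model. It is an illustration, not the actual SUNY Buffalo pipeline. The helper names, the intrinsics (`fx`, `fy`, `cx`, `cy`) and the camera-to-world pose (`rotation`, `translation`) are all hypothetical inputs.

```python
"""Sketch: back-project a depth map into a 3D point cloud (hypothetical helpers)."""

from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def backproject_depth(depth: Sequence[Sequence[float]], fx: float, fy: float,
                      cx: float, cy: float) -> List[Point3]:
    """Turn a per-pixel depth map (metres) into camera-frame 3D points.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with non-positive depth are treated as invalid and skipped.
    """
    points: List[Point3] = []
    for v, row in enumerate(depth):          # v = pixel row index
        for u, z in enumerate(row):          # u = pixel column index
            if z <= 0.0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def to_world(points: Sequence[Point3], rotation: Sequence[Sequence[float]],
             translation: Sequence[float]) -> List[Point3]:
    """Apply the rigid camera-to-world transform p_world = R p_cam + t."""
    world: List[Point3] = []
    for p in points:
        x, y, z = (sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
                   for i in range(3))
        world.append((x, y, z))
    return world


if __name__ == "__main__":
    depth = [[2.0, 2.0], [0.0, 4.0]]  # tiny 2x2 map; 0.0 marks a missing depth
    cloud = backproject_depth(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(to_world(cloud, identity, (0.0, 0.0, 1.5)))
```

The same rigid-transform step (a rotation plus a translation) is also how an extrinsic calibration result moves LIDAR points into a camera frame.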

This was my first internship in the computer science domain, which I completed while managing four Electronics and Telecommunication Engineering courses on the side.

Key Contributions:

● Built a PvP racing game for iOS and Android using C# and the Unity engine, reaching over 1,000 downloads within the first month of launch. Designed scalable systems for real-time player communication.

My Projects

● Built a complete implementation of the GPT-2 large language model (124 million parameters) from the ground up using only Python and PyTorch, and trained it on 100 billion tokens from the FineWeb-Edu dataset.

● Outperformed OpenAI's pretrained GPT-2 model on Hugging Face by 12% on the HellaSwag benchmark, and achieved results comparable to the GPT-3 model by fine-tuning and applying modern techniques to GPT-2.
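
The 124-million-parameter figure can be checked with simple arithmetic. The helper below is hypothetical and not taken from the project. It counts parameters under the standard GPT-2 small configuration: a 50,257-token vocabulary, a 1,024-token context, width 768, 12 transformer blocks, a 4x MLP expansion, biases on every linear layer, and an output head tied to the token embedding.

```python
"""Count the parameters of a GPT-2-style decoder (hypothetical helper)."""


def gpt2_param_count(vocab_size: int = 50257, n_ctx: int = 1024,
                     d_model: int = 768, n_layer: int = 12) -> int:
    """Return the parameter count assuming GPT-2's layer layout.

    Assumes biases on every linear layer, a 4x MLP expansion, LayerNorm with
    weight and bias, and an output head tied to the token embedding.
    """
    embeddings = vocab_size * d_model + n_ctx * d_model   # token + position
    layer_norm = 2 * d_model                               # gain and bias
    attn_qkv = d_model * 3 * d_model + 3 * d_model         # fused Q, K, V
    attn_proj = d_model * d_model + d_model                # attention output
    mlp_fc = d_model * 4 * d_model + 4 * d_model           # expand to 4x
    mlp_proj = 4 * d_model * d_model + d_model             # project back
    per_block = 2 * layer_norm + attn_qkv + attn_proj + mlp_fc + mlp_proj
    final_norm = layer_norm
    return embeddings + n_layer * per_block + final_norm


if __name__ == "__main__":
    print(f"{gpt2_param_count():,}")  # 124,439,808
```

Without weight tying, a separate output projection would add another 50,257 x 768 (about 38.6 million) parameters. This is why the tied GPT-2 small model is usually quoted as "124M".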

● Developed a DeepDream web application that generates artistic images by applying gradient ascent to VGG and ResNet50 feature maps, producing visually appealing images from any input, with more than 1,500 artworks produced.

● Deployed the application with Streamlit, reaching 60+ user sessions in the first week and more than 300 users to date.
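
DeepDream runs gradient ascent on the input image so that a chosen layer's activations grow larger. Each step is commonly normalized by the mean absolute gradient. The sketch below shows that update loop in plain Python on a toy stand-in for a network layer, a small linear map `W`. It is not the project's code. In the real application, VGG or ResNet50 feature maps would replace the hypothetical `features` function, and an autodiff framework such as PyTorch would supply the gradient.

```python
"""Toy sketch of DeepDream-style gradient ascent on the input (pure Python)."""

from typing import List

Vector = List[float]
Matrix = List[List[float]]


def features(weights: Matrix, x: Vector) -> Vector:
    """Toy stand-in for a CNN layer: a linear map h = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def objective(weights: Matrix, x: Vector) -> float:
    """DeepDream-style objective: half the squared L2 norm of the activations."""
    return 0.5 * sum(h * h for h in features(weights, x))


def gradient(weights: Matrix, x: Vector) -> Vector:
    """Analytic gradient of the objective with respect to the input: W^T (W x)."""
    h = features(weights, x)
    return [sum(weights[r][c] * h[r] for r in range(len(h))) for c in range(len(x))]


def dream(weights: Matrix, x: Vector, steps: int = 10, lr: float = 0.05) -> Vector:
    """Gradient *ascent* on the input, scaling each step by 1 / mean(|grad|)."""
    x = list(x)
    for _ in range(steps):
        g = gradient(weights, x)
        scale = sum(abs(gi) for gi in g) / len(g) + 1e-8  # avoid division by zero
        x = [xi + lr * gi / scale for xi, gi in zip(x, g)]
    return x


if __name__ == "__main__":
    W = [[1.0, -0.5, 0.0], [0.2, 0.8, 0.3]]
    x0 = [0.1, 0.2, 0.3]
    print(objective(W, x0), "->", objective(W, dream(W, x0)))
```

Normalizing by the mean gradient magnitude keeps the step size roughly constant even when raw gradients are very small or very large, which is why DeepDream-style loops tend to use it.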

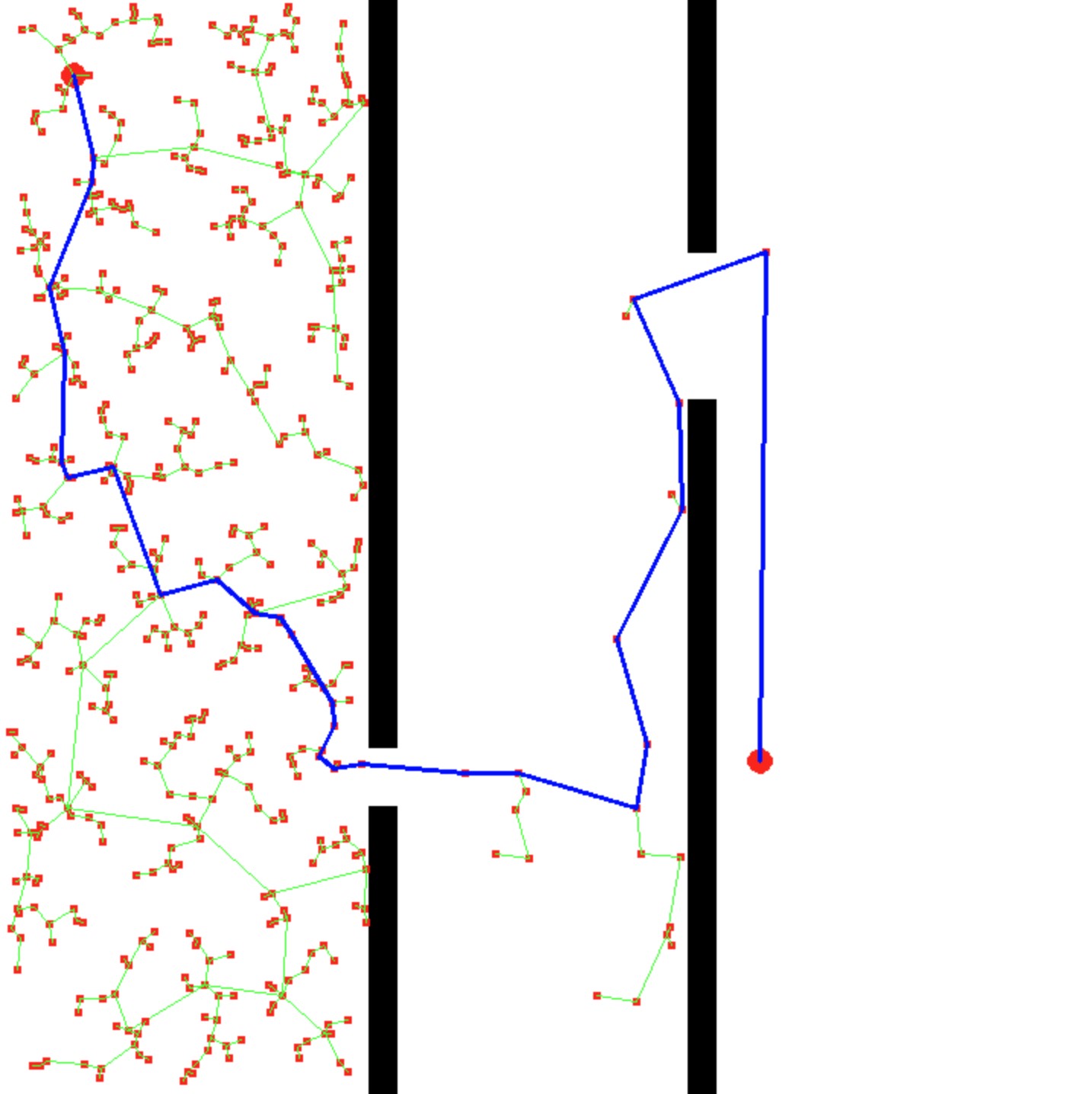

● Designed a Python-based Rapidly-exploring Random Tree (RRT) algorithm, which incrementally grows a search tree, to find feasible, collision-free paths for robot navigation in obstacle-filled environments, reducing navigation time by 15%.

● Validated the RRT algorithm's performance via simulations in Gazebo and ROS, attaining a 98% success rate in obstacle navigation tests.
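
The RRT bullets can be illustrated with a compact sketch. Plain RRT grows a tree by sampling random points and steering toward them. It returns a feasible, collision-free path, not necessarily the shortest one; RRT* adds rewiring to approach optimality. The code below is a generic textbook-style version, not the project's implementation. The circular obstacles, the 10 x 10 workspace, and parameters such as `step`, `goal_tol`, and `goal_bias` are hypothetical choices.

```python
"""Minimal 2D RRT sketch (pure Python; obstacles and parameters are hypothetical)."""

import math
import random
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # (centre x, centre y, radius)


def collision_free(a: Point, b: Point, obstacles: List[Circle], samples: int = 20) -> bool:
    """Approximate segment check: test evenly spaced points from a to b."""
    for k in range(samples + 1):
        t = k / samples
        x, y = a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1])
        if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
            return False
    return True


def rrt(start: Point, goal: Point, obstacles: List[Circle],
        bounds: Tuple[float, float] = (0.0, 10.0), step: float = 0.5,
        goal_tol: float = 0.5, goal_bias: float = 0.1,
        max_iters: int = 5000, seed: int = 0) -> Optional[List[Point]]:
    """Grow a tree from start; return a start-to-goal path or None."""
    rng = random.Random(seed)
    nodes: List[Point] = [start]
    parent: Dict[int, Optional[int]] = {0: None}  # node index -> parent index
    for _ in range(max_iters):
        # Sample a random point, occasionally the goal itself (goal bias).
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        # Nearest existing node (linear scan; a k-d tree would scale better).
        near_i = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_i]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Steer at most `step` from the nearest node toward the sample.
        s = min(step, d) / d
        new = (near[0] + s * (sample[0] - near[0]), near[1] + s * (sample[1] - near[1]))
        if not collision_free(near, new, obstacles):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = near_i
        if math.dist(new, goal) <= goal_tol and collision_free(new, goal, obstacles):
            # Follow parent links back to the root, then reverse.
            path: List[Point] = [goal]
            i: Optional[int] = len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


if __name__ == "__main__":
    path = rrt((1.0, 1.0), (9.0, 9.0), obstacles=[(5.0, 5.0, 1.5)])
    print("no path found" if path is None else f"{len(path)} waypoints")
```

A fixed `seed` makes runs reproducible, which helps when comparing results in simulation tests such as the Gazebo/ROS runs described above.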

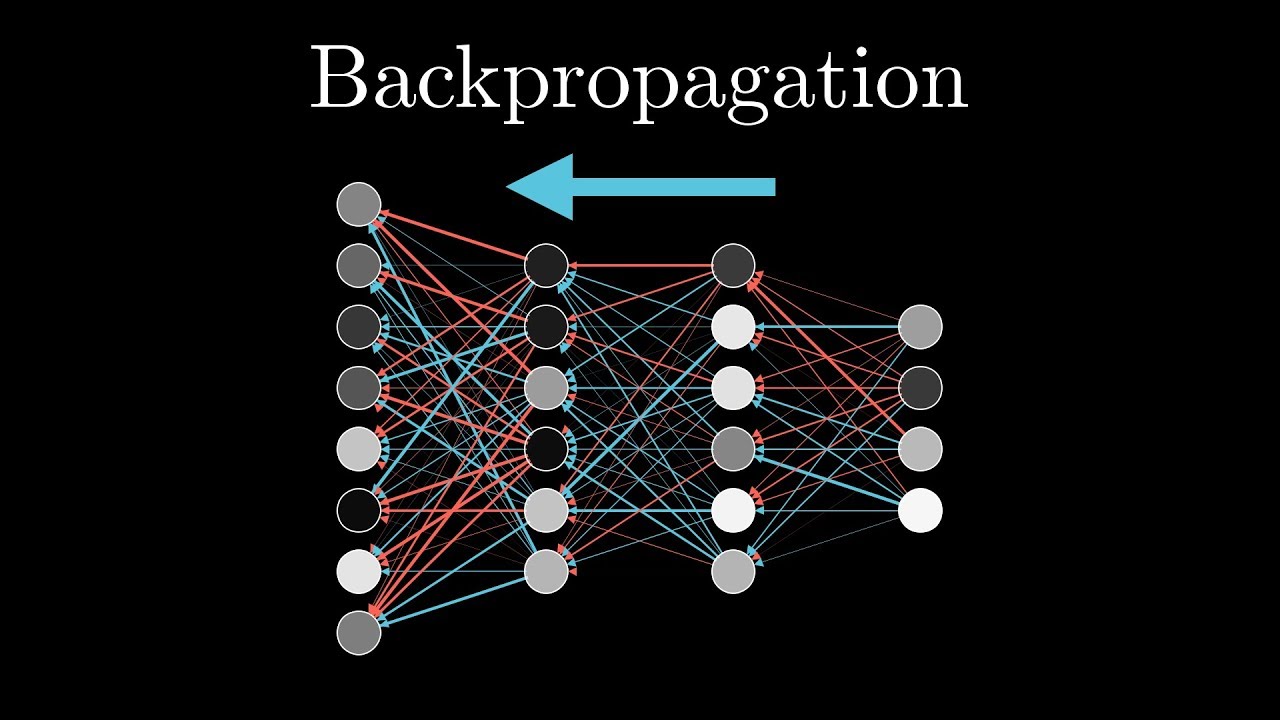

● Built a miniature autograd engine and neural network library with PyTorch-like functionality, enabling detailed exploration of gradient computation and neural network mechanics through visualization.

● Implemented automatic differentiation and neural network libraries, allowing for creation and training of models from scratch with 10+ customizable layers, 5+ activation functions, and robust gradient computation methods.
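
To make the automatic-differentiation bullet concrete, here is a minimal scalar reverse-mode autodiff sketch in the spirit of such a library. It supports only addition, multiplication, and `tanh`. The `Value` class and its methods are illustrative names, not the project's actual API.

```python
"""Tiny scalar reverse-mode autodiff sketch (illustrative, not the project's API)."""

import math
from typing import Callable, Tuple, Union


class Value:
    """A scalar that records the operations producing it, for backprop."""

    def __init__(self, data: float, parents: Tuple["Value", ...] = ()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward: Callable[[], None] = lambda: None

    def __add__(self, other: Union["Value", float]) -> "Value":
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward() -> None:
            # d(a + b)/da = d(a + b)/db = 1; accumulate for reused nodes.
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    __radd__ = __add__

    def __mul__(self, other: Union["Value", float]) -> "Value":
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward() -> None:
            # d(a * b)/da = b and d(a * b)/db = a.
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    __rmul__ = __mul__

    def tanh(self) -> "Value":
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def _backward() -> None:
            self.grad += (1.0 - t * t) * out.grad  # d tanh(x)/dx = 1 - tanh(x)^2

        out._backward = _backward
        return out

    def backward(self) -> None:
        """Topologically sort the graph, then apply the chain rule in reverse."""
        order, seen = [], set()

        def visit(v: "Value") -> None:
            if v not in seen:
                seen.add(v)
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            v._backward()


if __name__ == "__main__":
    x, y = Value(2.0), Value(-3.0)
    z = x * y + x
    z.backward()
    print(z.data, x.grad, y.grad)  # -4.0 -2.0 2.0
```

Gradients are accumulated with `+=` so that a value used more than once (like `x` above) receives the sum of its contributions. This matches how full frameworks such as PyTorch accumulate into `.grad`.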

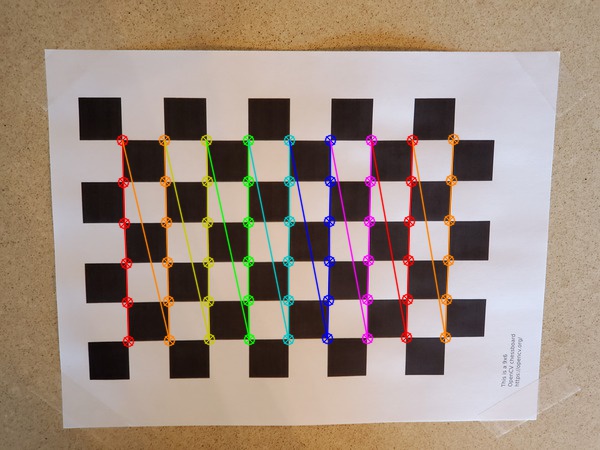

● The "Bird's Eye View" project for autonomous cars transforms camera images into a top-down aerial perspective to enhance the perception capabilities of self-driving vehicles. The model learns spatial features and maps the image onto a different plane by learning a homography matrix.

● Calibrated the camera with the checkerboard method, computing the projection matrix that relates 3D world points to 2D image points for computer vision tasks.
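
The calibration and Bird's Eye View bullets rest on the same geometry. A checkerboard calibration (for example with OpenCV's `cv2.findChessboardCorners` and `cv2.calibrateCamera`) yields intrinsics `K` and a pose `R`, `t`, which together give the projection matrix P = K [R | t]. For points on the ground plane Z = 0, the third column of P drops out, leaving a 3 x 3 homography H = [p1 p2 p4]. Inverting H maps image pixels back to ground coordinates, which is the warp a bird's-eye view needs. The project describes learning its homography, so this plain-Python sketch with hypothetical `K`, `R`, and `t` is only a geometric illustration, not the project's model.

```python
"""Pinhole projection P = K [R | t] and the ground-plane homography it induces."""

from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def projection_matrix(K: Matrix, R: Matrix, t: Sequence[float]) -> Matrix:
    """Stack [R | t] into a 3x4 matrix and left-multiply by the intrinsics K."""
    Rt = [list(R[i]) + [t[i]] for i in range(3)]
    return matmul(K, Rt)


def project(P: Matrix, X: Sequence[float]) -> Tuple[float, float]:
    """Project a 3D world point (X, Y, Z) to pixel coordinates (u, v)."""
    Xh = list(X) + [1.0]  # homogeneous coordinates
    x = [sum(P[i][j] * Xh[j] for j in range(4)) for i in range(3)]
    return x[0] / x[2], x[1] / x[2]


def ground_homography(P: Matrix) -> Matrix:
    """On the plane Z = 0 the third column of P drops out: H = [p1 p2 p4]."""
    return [[row[0], row[1], row[3]] for row in P]


def apply_homography(H: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Map (x, y) through H and divide by the homogeneous scale."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def invert3(m: Matrix) -> Matrix:
    """Inverse of a non-singular 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


if __name__ == "__main__":
    K = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    P = projection_matrix(K, R, (0.0, 0.0, 5.0))
    H = ground_homography(P)
    u, v = project(P, (1.0, 2.0, 0.0))
    print((u, v), apply_homography(invert3(H), u, v))  # pixel, then back to ground
```

For a real forward-facing vehicle camera, `R` would tilt the optical axis toward the road. The algebra is unchanged: the ground-to-image map is still the 3 x 3 matrix H, and its inverse produces the top-down view.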

My Skills

PROGRAMMING LANGUAGES

ARTIFICIAL INTELLIGENCE

ROBOTICS

COMPUTER VISION

DEVELOPER TOOLS

My Education

Coursework -

• Data Structures and Algorithms

• Deep Learning

• Reinforcement Learning

• Robotics Algorithms

• Computer Vision

Coursework -

• Programming

• Data Compression

• Neural Networks

Have a project? Want to hire me? Or just looking to reach out?

Feel free to reach out if you just want to connect or see if we can build something amazing together.